Let’s take a look at a problem I worked on recently: merging mesh objects with volumetric shader SDFs. I’ll break it down by problem and solution.

The Problem

At a previous company, we had 3D-printer slicing software that worked with meshes (STLs and similar formats, as almost all slicers do). As a new feature, we wanted the ability to add infills for lightweighting without strength loss, as well as control over a proprietary advanced-materials property. Infills are expensive to generate and render as meshes, but easy to render as implicit models (such as volumetric SDFs for raymarched shaders). You can combine SDFs (signed distance fields) of boundary objects like spheres and cubes with SDFs of fills like gyroids or octets fairly easily, but there is little to be found on merging the implicit, raymarched world of SDFs with the triangulated world of meshes. These two worlds are typically kept apart by the divide between CPU-side mesh processing and GPU-side shader code.
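To make the "fairly easy" SDF-with-SDF case concrete, here is a minimal CPU-side sketch in TypeScript (a real version would live in a GLSL fragment shader). The function names, the cell size, and the wall thickness are illustrative assumptions, not values from the original project. Note that the gyroid field is only an approximate distance, which is why raymarchers usually take shortened steps through it.

```typescript
type Vec3 = [number, number, number];

// Exact signed distance to a sphere of radius r centered at the origin.
function sdSphere(p: Vec3, r: number): number {
  return Math.hypot(p[0], p[1], p[2]) - r;
}

// Gyroid shell with a given unit-cell size and wall thickness.
// The raw gyroid field is not a true Euclidean distance, so this is an
// approximation that is good enough for conservative raymarching.
function sdGyroid(p: Vec3, cellSize: number, thickness: number): number {
  const k = (2 * Math.PI) / cellSize;
  const [x, y, z] = [p[0] * k, p[1] * k, p[2] * k];
  const g =
    Math.sin(x) * Math.cos(y) +
    Math.sin(y) * Math.cos(z) +
    Math.sin(z) * Math.cos(x);
  return Math.abs(g) / k - thickness / 2;
}

// Intersection of two SDFs: a point is inside only if it is inside both.
function opIntersect(a: number, b: number): number {
  return Math.max(a, b);
}

// Hypothetical example: a unit sphere filled with a gyroid infill.
function sdGyroidFilledSphere(p: Vec3): number {
  return opIntersect(sdSphere(p, 1.0), sdGyroid(p, 0.5, 0.05));
}
```

A negative result means the point is solid material: it lies inside the sphere and inside a gyroid wall. The hard part discussed in the rest of this post is replacing `sdSphere` with an arbitrary mesh.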

The Problem: Meshes and SDFs don’t work well together. In particular, it is hard to use a surface mesh as the boundary for an implicit infill pattern such as a gyroid or octet beams.

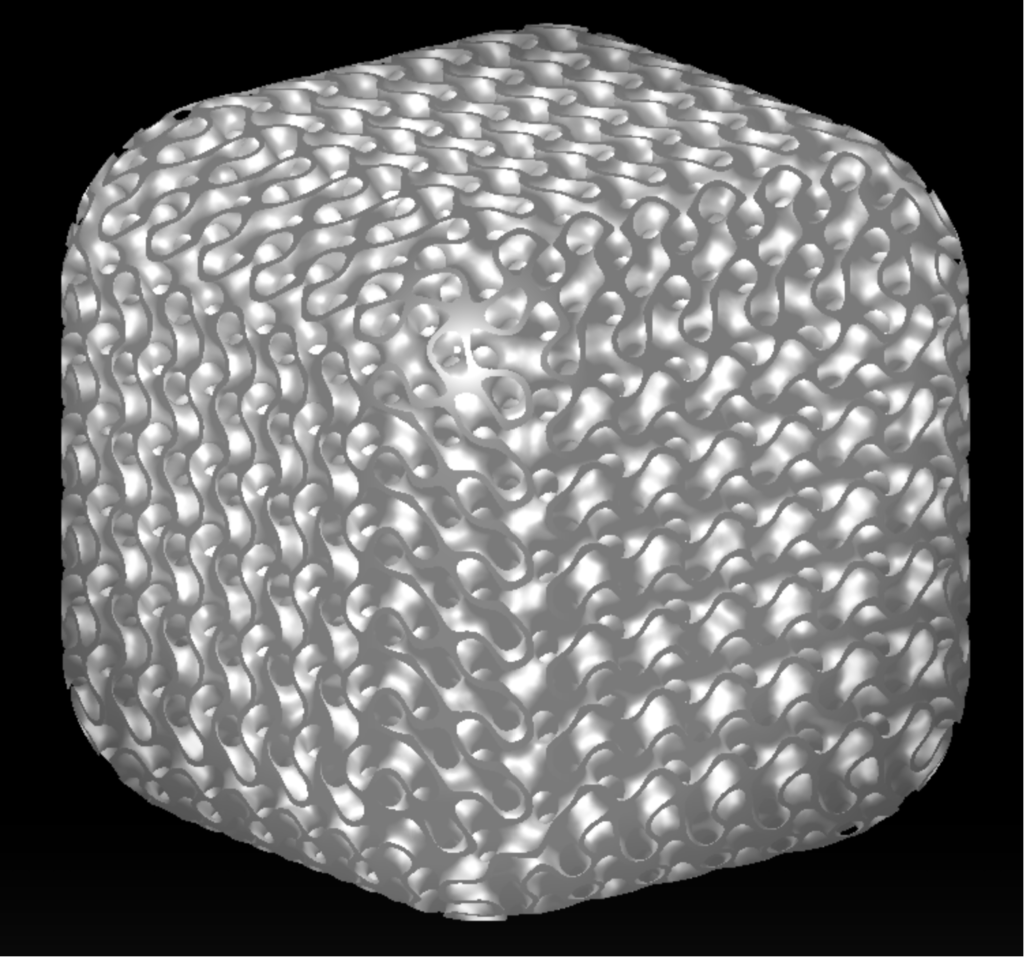

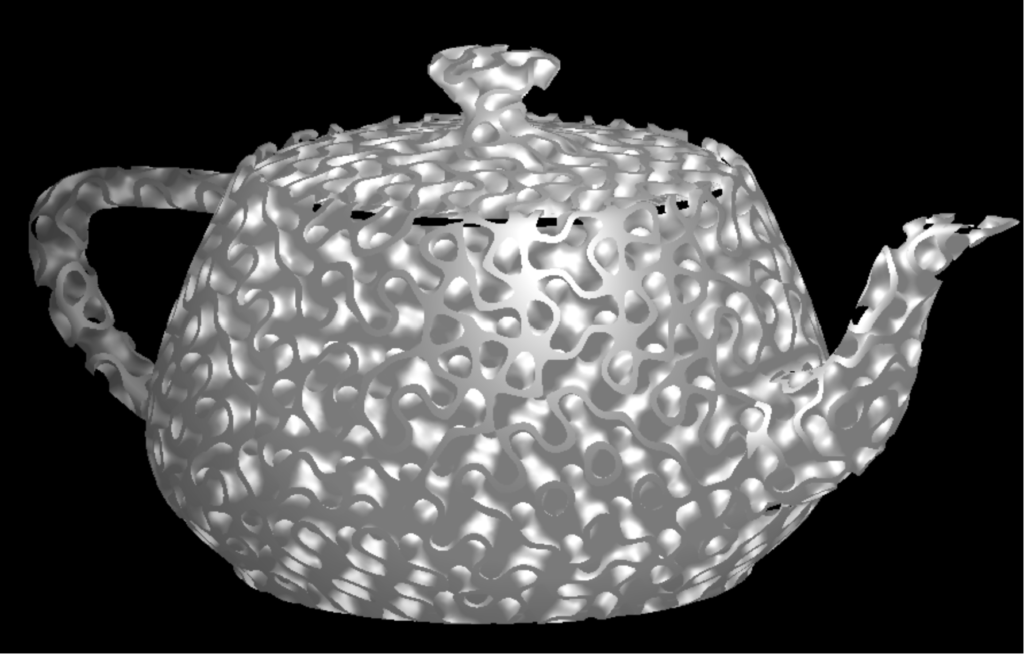

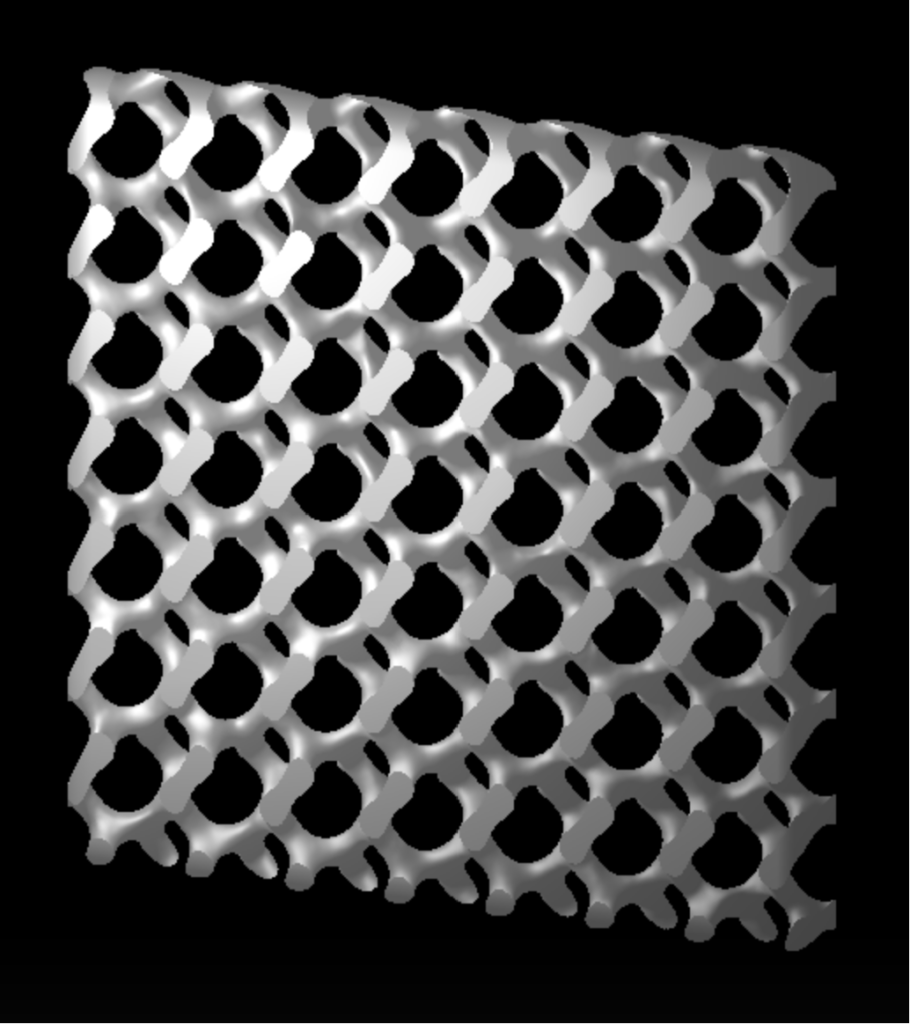

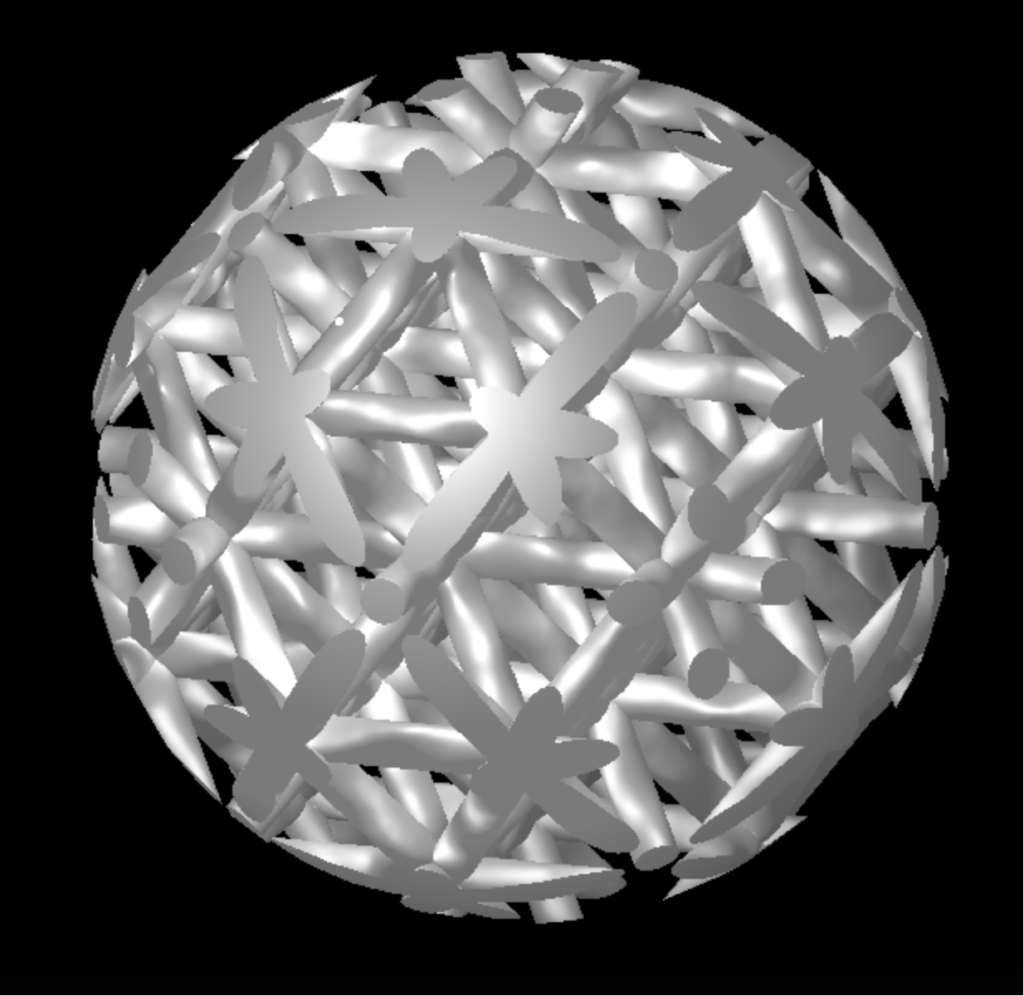

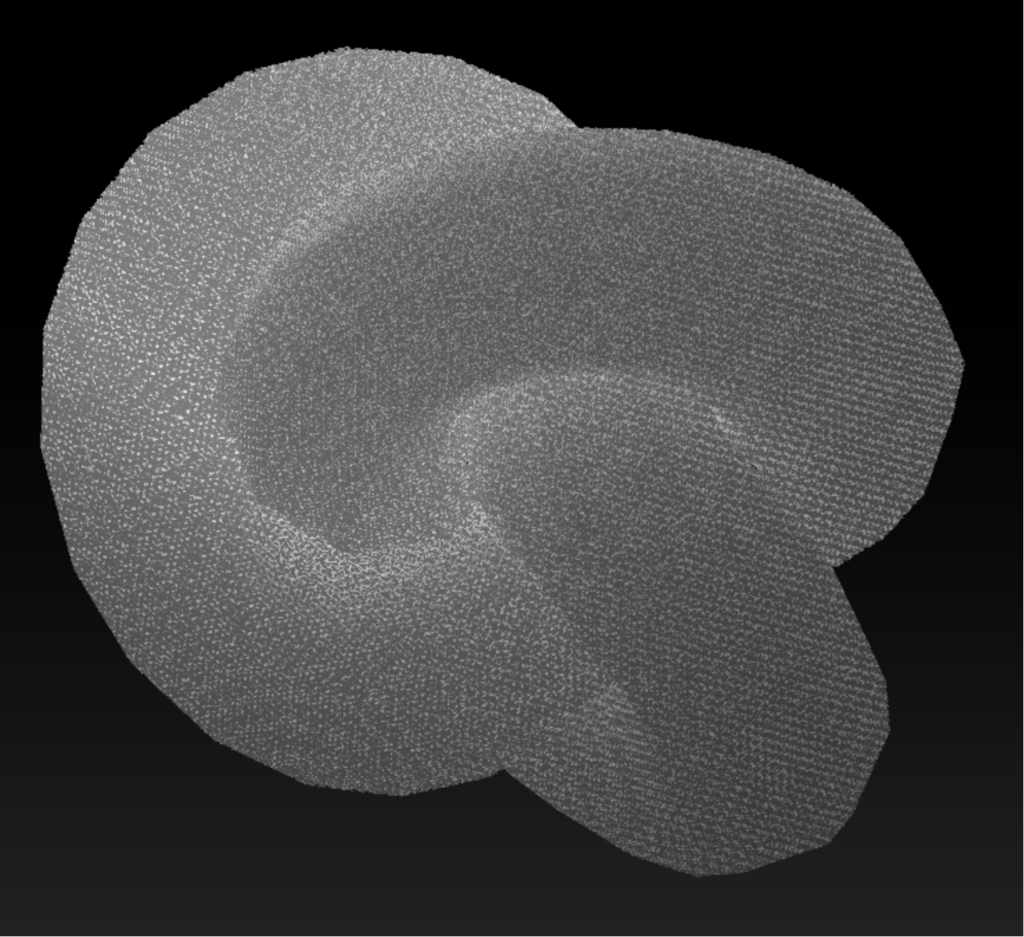

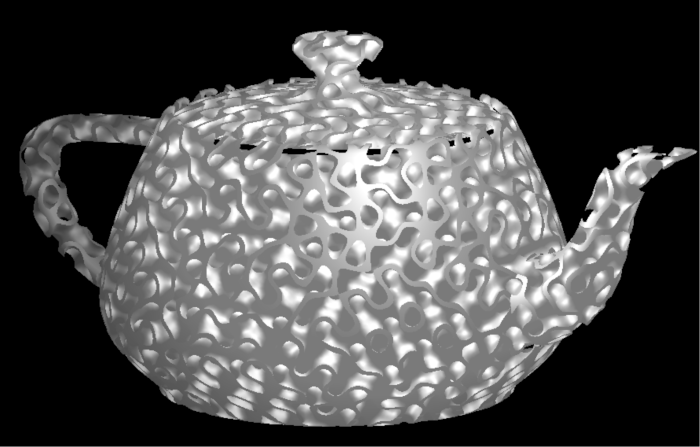

(Gyroid Fill – w/ Cube, Solid Mesh of Teapot, and Gyroid Fill Zoomed In – 2x2x2 Unit Cells)

The Solution

To merge our mesh with the implicit infill, I extended our mesh scene with a custom shader material (in Three.js) that carries the information for a second scene, this one rendered implicitly by a raymarching fragment shader. The key here was to set up the shader uniforms so that any change to the first scene is mirrored exactly in the second scene.
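As a rough illustration of keeping the two scenes in lockstep, the sketch below copies camera and mesh transforms into a set of shader uniforms once per frame. It is an assumption about how such syncing could look, not the original code. The interfaces are structural stand-ins so the snippet runs without Three.js installed; their field names mirror Three.js (`Matrix4.elements`, `Object3D.matrixWorld`, `Camera.projectionMatrixInverse`), while the uniform names (`uCameraWorld`, `uProjectionInverse`, `uMeshWorld`) are hypothetical.

```typescript
// Structural stand-ins for the Three.js objects involved.
interface Mat4Like { elements: number[] }
interface CameraLike { matrixWorld: Mat4Like; projectionMatrixInverse: Mat4Like }
interface MeshLike { matrixWorld: Mat4Like }
interface Uniform<T> { value: T }

// Hypothetical uniform block consumed by the raymarching fragment shader.
interface RaymarchUniforms {
  uCameraWorld: Uniform<number[]>;
  uProjectionInverse: Uniform<number[]>;
  uMeshWorld: Uniform<number[]>;
}

// Copy the current state of the mesh scene into the raymarcher's uniforms.
// Values are copied (not aliased) so the shader sees a consistent snapshot.
function syncRaymarchUniforms(
  camera: CameraLike,
  mesh: MeshLike,
  uniforms: RaymarchUniforms
): void {
  uniforms.uCameraWorld.value = camera.matrixWorld.elements.slice();
  uniforms.uProjectionInverse.value = camera.projectionMatrixInverse.elements.slice();
  uniforms.uMeshWorld.value = mesh.matrixWorld.elements.slice();
}
```

Calling a function like this right before every render means the raymarched scene reconstructs its rays from the same camera and places its infill with the same transform as the rasterized mesh.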

Next, I set up the renderer to do multiple passes per draw. The first passes write to render targets that store depth information about where the mesh is entered and exited along each pixel’s view ray from the camera. This tells us not only the depth of each fragment’s first hit, but also the depth at which the ray exits the mesh, plus any subsequent entrances and exits. This method is known as Depth Peeling and is described in a well-known Nvidia paper on its dual variant. (source: https://developer.download.nvidia.com/SDK/10/opengl/src/dual_depth_peeling/doc/DualDepthPeeling.pdf)
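The idea behind the peeling passes can be sketched on the CPU for a single pixel. This is a simplified analogue of basic front-to-back depth peeling, not the dual variant from the paper and not the original render-target code: each "pass" keeps the nearest fragment strictly behind the layer found by the previous pass. The function names are hypothetical, and pairing layers into entry/exit intervals assumes a closed (watertight) mesh with the camera outside it.

```typescript
// One peeling pass for one pixel: the nearest fragment depth that lies
// strictly behind the previously peeled layer, or null if none remains.
function peelNext(fragmentDepths: number[], previousDepth: number): number | null {
  let nearest = Infinity;
  for (const d of fragmentDepths) {
    if (d > previousDepth && d < nearest) nearest = d;
  }
  return Number.isFinite(nearest) ? nearest : null;
}

// Run up to maxLayers passes; fragments arrive in arbitrary order,
// just as triangles do in a rasterizer.
function peelLayers(fragmentDepths: number[], maxLayers: number): number[] {
  const layers: number[] = [];
  let previous = -Infinity;
  for (let pass = 0; pass < maxLayers; pass++) {
    const next = peelNext(fragmentDepths, previous);
    if (next === null) break;
    layers.push(next);
    previous = next;
  }
  return layers;
}

// For a closed mesh viewed from outside, layers alternate entry, exit.
function toIntervals(layers: number[]): Array<[number, number]> {
  const intervals: Array<[number, number]> = [];
  for (let i = 0; i + 1 < layers.length; i += 2) {
    intervals.push([layers[i], layers[i + 1]]);
  }
  return intervals;
}
```

For example, a ray that crosses surfaces at depths 5, 2, 9 and 3 peels into the layers 2, 3, 5, 9, which pair up as two solid spans: one from 2 to 3 and one from 5 to 9. The number of passes caps how many spans can be captured per pixel.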

Passing the textures of those render targets into the final render pass allows us to construct a cheap SDF of the mesh itself, without having to check all the millions of triangles of the mesh for each fragment. This representation can then be easily combined with other SDFs, such as the gyroid fill described before, in a standard volumetric shader raymarcher.
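One way to read "cheap SDF" is as a signed distance measured only along each pixel's view ray, built from the peeled entry/exit depths. The TypeScript sketch below shows that interpretation for a single ray; it is an assumption about the approach, not the original shader. All names, the gyroid parameters, the 0.5 step scale, and the step limit are illustrative choices.

```typescript
type Vec3 = [number, number, number];
type Interval = [number, number]; // [entry, exit] distances along the view ray

// Signed distance to the mesh measured along the ray only:
// negative inside any [entry, exit] span, positive outside all of them.
function meshDistanceAlongRay(t: number, intervals: Interval[]): number {
  let d = Infinity;
  for (const [entry, exit] of intervals) {
    d = Math.min(d, Math.max(entry - t, t - exit));
  }
  return d;
}

// Approximate distance to a gyroid shell (see the note on step scaling below).
function sdGyroid(p: Vec3, cellSize: number, thickness: number): number {
  const k = (2 * Math.PI) / cellSize;
  const [x, y, z] = [p[0] * k, p[1] * k, p[2] * k];
  const g =
    Math.sin(x) * Math.cos(y) +
    Math.sin(y) * Math.cos(z) +
    Math.sin(z) * Math.cos(x);
  return Math.abs(g) / k - thickness / 2;
}

// Sphere-trace the intersection of mesh and gyroid along one ray.
// Returns the hit distance, or null if the ray leaves the mesh without a hit.
function marchInfill(
  origin: Vec3,
  dir: Vec3,
  intervals: Interval[],
  maxSteps = 256
): number | null {
  if (intervals.length === 0) return null;
  const eps = 1e-4;
  const tMax = intervals[intervals.length - 1][1]; // last exit along the ray
  let t = 0;
  for (let i = 0; i < maxSteps && t <= tMax; i++) {
    const p: Vec3 = [
      origin[0] + dir[0] * t,
      origin[1] + dir[1] * t,
      origin[2] + dir[2] * t,
    ];
    const d = Math.max(
      meshDistanceAlongRay(t, intervals),
      sdGyroid(p, 0.5, 0.05)
    );
    if (d < eps) return t;
    t += d * 0.5; // shortened step because the gyroid distance is approximate
  }
  return null;
}
```

The mesh never appears as triangles here: per pixel, it is reduced to a handful of depth values, so the cost of the mesh term does not grow with triangle count.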

The beauty of implicit geometry is also that you can render very fine repeating detail, such as millions of tiny unit cells, with no noticeable performance loss. This solution is efficient and effective for showing the model on screen for editing and previews.

For slicing itself, another method was employed using WebGL stencil buffers, but I cannot discuss that here.

The Solution: Utilize Depth Peeling algorithms to create a cheap SDF of the mesh, which can then be used in a raymarching scene that is identical to the mesh scene.

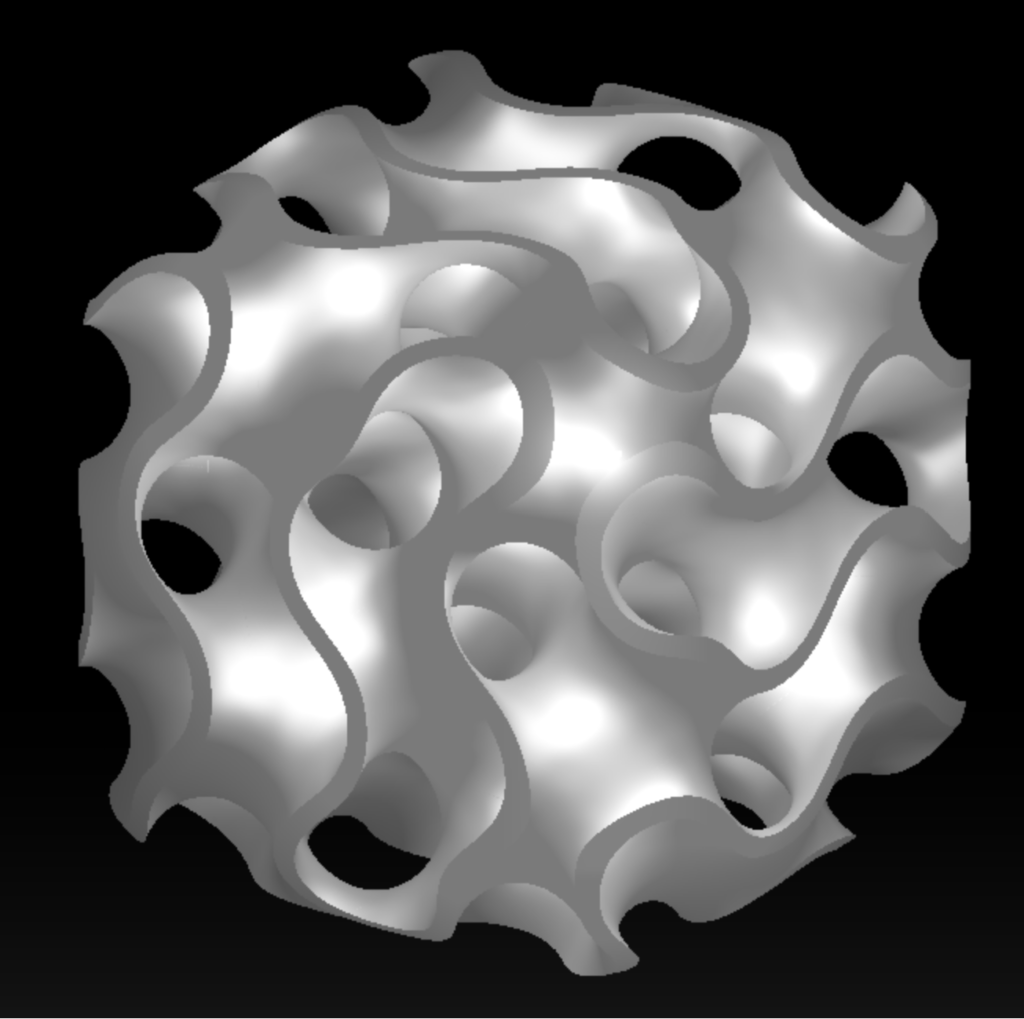

(Thin Wall of Gyroid Beams, Sphere w/ Octet Fill, Torus Knot w/ Fine Gyroid Fill – No Performance Loss)

Thanks for following along! To run a live demo of this, you can use the following link:

LIVE DEMO

If you want to learn more about these topics for your project, or want to hire me on a freelance/contract basis to implement optimizations like this and others in your web or desktop graphics projects, please browse the rest of this website and contact me for details.

Disclaimer: Images and demo are from a personal replication of this specific feature, not from company materials. They are not representative of my full professional quality standards; they are only intended to communicate a process.
